Convolutional Neural Network-based Multi-Classification of Skin Disease with Fine-Tuned ResNet50 and VGG16

Abstract

Introduction

Skin disorders present a significant global public health concern, affecting millions of individuals and contributing to high morbidity rates. The application of artificial intelligence, particularly deep learning, has emerged as a promising avenue for the automated classification and detection of skin diseases, offering potential improvements in accuracy, speed, and cost-effectiveness in dermatological diagnostics.

Methods

This study aims to evaluate the performance of convolutional neural networks (CNNs) in classifying skin diseases. Four widely recognized architectures—ResNet50, InceptionV3, EfficientNetB0, and VGG16—were implemented and compared using a dataset comprising 1,159 dermoscopy images across eight disease categories. Models were trained using the Adam optimizer with a batch size of 32 over 20 epochs. Performance metrics were assessed and benchmarked against findings from existing literature.

Results

Among the evaluated models, EfficientNetB0 performed best, with a precision of 96.76%, followed by InceptionV3 and ResNet50 with accuracies of around 93.5%. VGG16 demonstrated the lowest performance, with an accuracy of 84.32%. These results suggest that EfficientNetB0 offers superior feature extraction and generalization capabilities for dermatological image classification.

Discussion

The findings suggest that recent CNN architectures, particularly EfficientNetB0, can improve the accuracy of skin disease classification. These improvements may facilitate more effective and scalable diagnostic tools in dermatology. Limitations of this study include the relatively small dataset and limited class diversity, which may affect model generalizability.

Conclusion

EfficientNetB0 outperformed ResNet50, InceptionV3, and VGG16 in classifying skin diseases, highlighting its potential for clinical application. Future research should focus on expanding datasets, refining model architectures, and deploying automated skin disease screening systems in real-world healthcare settings.

1. INTRODUCTION

A person's state of health encompasses physical, social, and mental well-being. A healthy lifestyle enables people to lead a life full of meaning and purpose [1]. When body, mind, and spirit are in harmony, people can enjoy a fruitful life characterized by a state of equilibrium. Understanding and prioritizing health is therefore paramount for individuals, communities, and societies as a whole. The skin, the largest organ of the human body, acts as a barrier between the body's internal organs and the outside world. It protects the body from harmful external agents, supports the body's immune defense, and regulates body temperature [2]. Factors such as smoking, alcohol consumption, infections, and UV radiation can aggravate skin problems. Bacteria, fungi, and other microorganisms, as well as allergic reactions, can alter the skin's texture or pigmentation and contribute to skin illnesses [3]. The British Skin Foundation reports that around 60 percent of the UK population has or has had skin issues, and the UK records at least 100,000 new cases of skin cancer every year. Skin conditions affect people of all ages. Skin infections can cause symptoms such as a burning sensation, redness, swelling, or itchiness.

Furthermore, allergic reactions can cause rashes that affect the appearance of the skin [4]. The human skin has three primary layers: the epidermis, the dermis, and the hypodermis. Each of these layers is prone to illnesses, some of which can prove fatal [3].

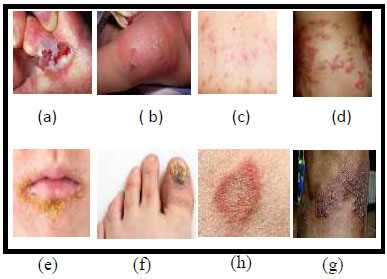

It has been proven that skin conditions might negatively affect a person's ability to enjoy life fully. Dermatology is the field that deals with problems related to the skin, hair, and nails. The combination of clinical screening and dermatological analysis is necessary to accurately diagnose skin diseases. Dermatological disorders affect patients’ self-esteem as they experience depression and anxiety, which leads to psychological effects [5]. Humans face a wide range of skin diseases, including Athlete's Foot (Fig. 1a), Cellulitis (Fig. 1b), Chickenpox (Fig. 1c), Cutaneous Larva Migrans (Fig. 1d), Impetigo (Fig. 1e), Nail Fungus (Fig. 1f), Ringworm (Fig. 1g), and Shingles (Fig. 1h) [6].

The human nervous system has inspired many significant achievements in deep learning. With a rapid increase in the amount of biomedical data, including both images and medical records, deep learning has shown remarkable success in solving medical image processing issues. Advancements in laser and photonics-based technologies for medical applications have led to rapid and accurate diagnosis of skin disorders. However, the cost and accessibility of such an evaluation remain confined [7]. So, this problem can be solved using DL methods whose CNN is the foundation of computer vision systems and has substantially changed the field of image processing. CNN performs exceptionally well on image classification problems since it was designed to automatically learn hierarchical patterns and features from incoming images without the need for explicit characteristics. The fully connected, pooling, and convolutional layers that comprise CNN models are interwoven together. As a result, CNN is able to comprehend images in a hierarchical manner, accumulating increasingly complex information as it progresses from low-level object representations to high-level edges and textures. The comparable image data is repeated numerous times using filters to create a feature map. CNN retrieves essential

Samples of skin diseases (a) Athlete-Foot, (b) Cellulitis, (c) Chicken Pox, (d) Cutaneous larva migrans, (e) Impetigo, (f) Nail fungus, (g) ringworm, (h) Shingles [6].

traits from the images when hundreds of features need to be collected and returned. Due to these characteristics, a CNN model is highly recommended for image classification. CNN models come in a variety of architectures, each with distinctive characteristics, advantages, and disadvantages. CNN architectures are capable of automatically recognizing features in the input data to handle challenging issues. They acquire the predicted information to find and explore the features in the inaccessible patterns of data, resulting in notable effectiveness due to the low computational cost [8, 9]. This is the main logic behind why many researchers are considering using a deep learning model to classify concerns related to skin diseases. The following are the disadvantages of conventional dermatological diagnosis:

- Because medical resources are limited, few dermatologists have advanced training. The number of dermatologists is growing more slowly than the prevalence of skin disorders, so many patients lack access to qualified dermatologists.

- Dermatologists differ in experience and judgment, so the same patient may receive different diagnoses, which lowers diagnostic accuracy. Lighting, fatigue, and other circumstances can also influence the diagnosis of the same skin disease. Moreover, the varied presentation of a single skin illness produces large differences among images of that disease, leading to vague or inaccurate diagnoses.

This study used a dataset of skin conditions caused by different types of pathogens on the skin, such as viruses and fungi. When these pathogens multiply beyond what the immune system can control, infection or disease results. The dataset consists of the following classes: parasitic infections (cutaneous larva migrans), fungal infections (nail fungus, ringworm, athlete's foot), bacterial infections (cellulitis, impetigo), and viral skin infections (chickenpox, shingles).

The primary objective of this research is to use four CNN architectures that are well suited to image recognition, EfficientNetB0, ResNet50, InceptionV3, and VGG16, to identify skin disorders in the selected dataset. EfficientNetB0 stood out for its high accuracy and parameter efficiency, while ResNet50 and InceptionV3 also performed well. The main contributions of this study are as follows:

- The research presents a thorough comparative analysis of four well-known deep learning architectures, EfficientNetB0, ResNet50, InceptionV3, and VGG16, for skin disease classification.

- The models are trained on the skin-disease-dataset from Kaggle using the Adam optimizer with a batch size of 32 for 20 epochs, the configuration that produced the most efficient results in this study.

- The models' performance is assessed using accuracy, precision, recall, and F1-score.

2. BACKGROUND AND RELATED WORKS

Skin diseases are among the most common human ailments, and their principal causes are fungi, viruses, and bacteria. Dermatologists play a crucial role in diagnosing skin diseases, which are recognizable by their external manifestations. Because manual detection of skin diseases is difficult, computer-aided methods were developed, and many researchers have worked on identifying skin lesions using various artificial intelligence techniques. ML and DL models have replaced traditional approaches for detecting many kinds of skin disease, overcoming the constraints of manual diagnostic systems [10]. Malik et al. [11] used a CNN-based EfficientNet-B2 model on images from the DermNet NZ Image Library to distinguish between benign and malignant skin lesions. Although the model reached 89.55% accuracy, the authors presented it as a starting point for further study, acknowledging that complex scenarios require improvement. The extracted features were then used to train SVM classifiers. Anitha et al. and Kumar et al. discussed how skin diseases can be classified using appropriate image processing techniques, in which morphological methods are used for skin detection. Choosing the right threshold value is essential, because morphological opening, closing, erosion, and dilation all operate on the binary image produced by thresholding [12, 13]. Depending on the image texture, morphological procedures may not be suitable for predicting the spread of the affected region. In 2014, Yasir et al. combined various image processing algorithms for feature extraction with machine learning algorithms, which were trained and evaluated using artificial neural networks. The skin photos were first pre-processed to reveal certain characteristics before the disease type was determined. Eight image processing algorithms were applied, including YCbCr conversion, grayscale conversion, histogram analysis, binary masking, a smoothing filter, a median filter, and the Sobel operator, and ten distinct characteristics were chosen for the data modelling process [14]. The resulting precision for the supervised, unsupervised, and semi-supervised systems was 90%, 85%, and 88%, respectively. In 2019, Sreelatha et al. [15] introduced the Gradient and Feature Adaptive Contour (GFAC) approach for delineating lesion boundaries and recognizing early melanoma. Numerous Gaussian-distributed patterns were used to extract effective features and achieve precise segmentation. The proposed GFAC model produced finer boundaries that were free of noise.

In contrast, Mohammed et al. proposed a unified system that segments cutaneous lesion boundaries in complete dermoscopy images and includes a stage for classifying multiple skin lesions. The lesion boundaries are first segmented using a full-resolution deep convolutional network, and a neural network classifier is then applied for classification [16]. CNNs can struggle to discriminate accurately between lesions because skin lesions vary in size. To address this, Liu et al. [17] created a multiscale ensemble of CNNs (MECNN) that samples as many sites as possible around the lesion. The MECNN approach narrows the search space for a region of interest.

In 2021, Khan et al. presented a technique called High-Frequency with a Multi-layered Feed-Forward Neural Network (HFaFFNN), which aggregates all images and enhances them by applying an activation function derived from logarithmic opening [18]. The pre-trained CNNs NasNet-mobile and Darknet-53 were then used, with their parameters tuned for optimal performance. Finally, the extracted features were merged using a Parallel Max Entropy Correlation (PMEC) approach [18]. The primary advantage of this method is that it combines a large number of related features while controlling the length of the feature vector. However, a small number of redundant and irrelevant features are also included, which reduces the final classification accuracy. A hybrid feature optimization technique is therefore applied to select the best features, and a SoftMax classifier is used to classify the results.

Additionally, in the same year, Muhammad et al. described a reliable method for detecting skin lesions across various stages. Firstly, Local Color-controlled Histogram Intensity Values (LCcHIV) were employed to improve images. Secondly, a cutting-edge deep saliency method was used for segmentation. The binary images were obtained by utilizing the threshold function. Lastly, the enhanced moth flame optimization algorithm was employed to obtain the most significant characteristics. Ultimately, the system was able to identify skin lesions with remarkable precision by incorporating additional features and classifying the lesions using a kernel deep learning model [19].

Ensemble models, which combine several prediction models, achieve higher performance when classifying skin disorders [20]. The findings show that combining five different data mining techniques in such an ensemble yields greater accuracy in dermatological prediction than a single classifier. However, ensemble models are prone to overfitting, because they cannot adjust for unknown variations between the population and the sample under investigation [21, 22]. Deep neural network-based classification of skin diseases has also shown a remarkable level of performance, although experimental investigations have found such models unsuitable for multi-lesion images. Deep neural network models also require extensive training to reach a satisfactory level of accuracy, which increases computational time [23, 24]. Skin illness categorization also uses basic image processing techniques, including morphological processes for skin recognition [23, 24]. For these methods, choosing an appropriate threshold value is of utmost importance, since morphological closing, opening, erosion, and dilation primarily depend on the binary image produced through thresholding. Depending on the surface characteristics of the image, morphological procedures may not be suitable for determining the growth of the damaged region. Genetic Algorithms (GA) have also been used to develop methods for categorizing skin conditions [25, 26]. However, genetic algorithms typically take a long time to converge [27, 28], and they are not guaranteed to find the global optimal solution [29]. Table 1 presents a literature survey of previous research on classifying skin diseases.

| Ref./Year | Disease | Network Architecture | Dataset Name | Reported Performance | Findings |
|---|---|---|---|---|---|
| [30]/2024 | Melanoma | Region-based CNN based on the iteration-controlled Newton-Raphson method | ISIC 2016, ISBI 2017 | ISIC 2016: 94.5% accuracy; ISBI 2017: 93.40% accuracy | The technique performs faster and more accurately than current approaches. |
| [11]/2024 | Benign, malignant, basal cell carcinoma | Proposed CNN (7 conv layers) | ISIC | ISIC: 87.64% accuracy | A lightweight CNN model achieved high performance with data augmentation. |
| [31]/2021 | Seven classes of skin disease | MobileNetV2 and LSTM | HAM10000 | HAM10000: 85% accuracy | The proposed system efficiently diagnoses skin conditions and reduces future complications. |
| [16]/2020 | Skin cancer | Full-resolution CNN (FrCNN) | ISIC 2016, ISIC 2017, ISIC 2018 | ISIC 2016: 81.29%, 81.57%, 73.44%; ISIC 2017: 88.05%, 89.28%, 87.74%; ISIC 2018: 88.70% accuracy (7 classes) | Superior performance of ResNet50 was demonstrated. |
| [32]/2020 | Melanoma | GoogLeNet, ResNet101, NASNet-Large | PH2, ISIC 2019 | PH2: 95%; ISIC 2019: 93% accuracy | Higher accuracy was obtained for lesion segmentation without classification. |
| [33]/2020 | Skin diseases (benign or malignant melanoma, healthy, eczema, acne) | ECOC SVM with a deep convolutional neural network | AlexNet | AlexNet: 86.21% accuracy | CNN-derived features can help increase the classification accuracy of various skin lesions. |
| [34]/2023 | Acne, psoriasis, eczema, rosacea, vitiligo, urticaria | CNN/DCNN, ensemble, MLP, ANN | Mostly private datasets, some public | Accuracy, sensitivity, specificity, AUC, PPV, NPV | DL algorithms achieved high accuracy (e.g., acne: 94%, eczema: 93%) and high specificity, but variable sensitivity and limited external validation. |
| [33]/2020 | Melanoma | Multiclass multilevel classification algorithm | Self-generated data | 96.47% accuracy | Adding other diseases may extend the multiclass skin lesion classification toward mobile-enabled expert systems. |
| [3]/2021 | Skin cancer | ANN and FFNN | ISIC 2018, PH2 | ISIC 2018: 90%; PH2: 95.8% accuracy | The ANN and FFNN algorithms performed better than the ResNet50 and AlexNet methods. |
| [35]/2024 | Melanoma and other skin lesions | Proposed CNN architecture with batch normalization and ReLU | DermNet, HAM10000 | Accuracy, sensitivity, specificity, AUC | The proposed model outperformed the baseline CNN with improved AUC and specificity, demonstrating its suitability for melanoma detection. |
| [36]/2020 | Skin cancer | CNN with the k-nearest neighbor algorithm | ISIC | ISIC: 90% accuracy | The dataset is not suitable for coarse multiclass models but is well suited to linear binary classifiers. |
| [37]/2023 | Skin cancer | VGG16, ResUNet, and InceptionV3 | ISIC | ISIC: 93.7% accuracy | The proposed approach classifies skin cancer with good results compared with other modern methods. |
| [38]/2023 | Skin cancer | VGG16 | Self-generated datasets | 84.242% accuracy | VGG16 is an efficient model that performs well on training and validation data in categorising skin cancer images. |
| [39]/2021 | Skin cancer | VGG16 and CNN | HAM10000 | 84.87% accuracy | The developed model has high predictive capability. |
| [40]/2022 | Skin tumors | VGG16, Xception, and ResNet50 | HAM10000 | 88% accuracy | VGG16 exhibits the best overall performance when a balanced dataset is used. |
| [41]/2021 | Skin cancer | VGG16, SVM, ResNet50 | Skin Cancer: Benign vs. Malignant (Kaggle) | Accuracy: VGG16 93.18%, ResNet50 84.39%, SVM 83.48% | Precision increases with the number of parameters. |
| [42]/2022 | Skin cancer | VGG-16, ResNet50, ResNetX, InceptionV3, and MobileNet | ImageNet Large Scale Visual Recognition Challenge (ILSVRC) dataset | 99.87% accuracy | The recommended structure may help less experienced practitioners and dermatologists categorise different skin lesions. |
| [43]/2022 | Skin cancer | Hybrid TransUNet | ISIC 2018 | 92.11% accuracy | Hybrid TransUNet outperforms U-Net and other attention-based methods. |
| [44]/2025 | Skin disease | Hybrid model | Skin disease dataset | 97.57% accuracy | The hybrid model outperforms SOTA methods. |
| [45]/2025 | Skin lesion | ResNet50 | Monkeypox image data | 96% accuracy | The enhanced model performed excellently in identifying monkeypox. |

As the surveyed studies show, deep learning models such as VGG16 and ResNet50 frequently outperformed conventional machine learning algorithms in detecting skin diseases. Many researchers have therefore applied image recognition-based deep learning models to the diagnosis of skin conditions.

3. MATERIALS AND METHODS

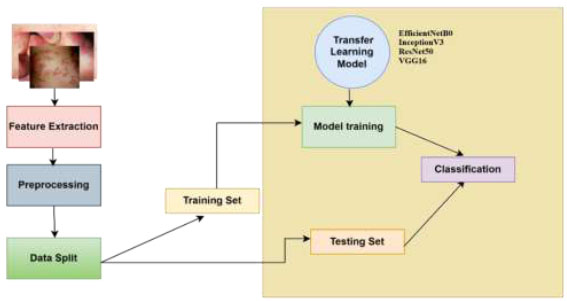

Artificial neural networks have been extensively applied in medical imaging. Convolutional Neural Networks (CNNs), a class of deep neural networks, show remarkable results in automatic feature extraction and classification. In this study, a single dataset is used to identify which of EfficientNetB0, InceptionV3, VGG16, and ResNet50 classifies images most effectively. The training dataset is a diverse set of dermatological images selected to cover a wide spectrum of skin ailments. The proposed architecture in Fig. (2) follows a typical deep learning pipeline for classifying skin illnesses, which consists of the following phases: (1) pre-processing, (2) transfer learning with the EfficientNetB0, InceptionV3, VGG16, and ResNet50 models, and (3) classification.

Workflow of the proposed methodology.

3.1. Pre-processing

Data collection and preparation are usually the first steps in skin disease classification, as they provide a broad dataset of labeled skin lesion images. To meet the input requirements of the CNN architectures, the images are resized to a fixed input size of 224 x 224 pixels. Pre-processing also includes normalization using model-specific preprocessing routines, which bring pixel values to a common scale and accelerate convergence. A stratified split is used so that every class is represented in the same proportion in the training and validation sets, and class weighting is applied during training to counter data imbalance.
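The stratified split described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' code: the helper name `stratified_split`, the fixed seed, and rounding each class's test quota are all assumptions (in practice, scikit-learn's `train_test_split` with its `stratify` argument is a common choice). The usage lines take the cellulitis and impetigo totals implied by Table 2 (136 + 34 = 170 and 80 + 20 = 100 images).

```python
import random
from collections import defaultdict


def stratified_split(labels, test_fraction=0.2, seed=42):
    """Hypothetical helper: return (train_idx, test_idx) such that every
    class keeps roughly the same train/test ratio."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)                          # shuffle within each class
        n_test = round(len(indices) * test_fraction)  # per-class test quota
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


# Usage: per-class totals taken from Table 2 (training + testing).
labels = ["cellulitis"] * 170 + ["impetigo"] * 100
train_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(test_idx))
```

Splitting each class separately is what guarantees the proportions; a single random split over all 1159 images could, by chance, under-represent a small class such as impetigo in the test set.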

3.2. Transfer Learning Models

This work employs transfer learning to adapt CNN models pre-trained on ImageNet to the task of skin disease classification. The base models (EfficientNetB0, InceptionV3, VGG16, and ResNet50) are loaded without their top layers, and custom classification heads consisting of GlobalAveragePooling, Dropout, and Dense layers are appended to them. The hierarchical features already learned by the base models are reused, so only the top layers need to be retrained (EfficientNetB0 is additionally fine-tuned end to end, as described in Section 4.2). This yields good performance on the dermatological dataset while substantially reducing training time and computational cost.

- Classification: In the final step, the network's deep convolutional layers extract discriminative features, which are passed through fully connected layers and a softmax classifier to produce a probability for each disease class. The predicted label is the class with the highest output probability. Performance is assessed using accuracy, precision, recall, and F1-score, and training curves, sample predictions, and confusion-matrix visualizations support the evaluation.
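To make the evaluation metrics concrete, the sketch below computes accuracy and macro-averaged precision, recall, and F1-score from a confusion matrix whose rows are true classes and columns are predicted classes. The paper does not state which averaging it uses, so macro averaging (an unweighted mean over classes) is an assumption here, as is the toy 2×2 matrix in the usage lines; the function name is hypothetical.

```python
def classification_metrics(cm):
    """Accuracy plus macro-averaged precision, recall and F1 from a square
    confusion matrix, where cm[i][j] counts true class i predicted as j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[k][k] for k in range(n))

    precisions, recalls, f1s = [], [], []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum: predicted as k
        actual_k = sum(cm[k])                          # row sum: truly k
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        f1 = 2 * p * r / (p + r) if (p + r) else 0.0
        precisions.append(p)
        recalls.append(r)
        f1s.append(f1)

    return {
        "accuracy": correct / total,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Usage with a toy two-class confusion matrix (not results from this study).
metrics = classification_metrics([[8, 2], [1, 9]])
print(metrics)
```

Macro averaging weights every disease class equally, which matters here because the classes in Table 2 range from 80 to 136 training images; a weighted average would let the larger classes dominate.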

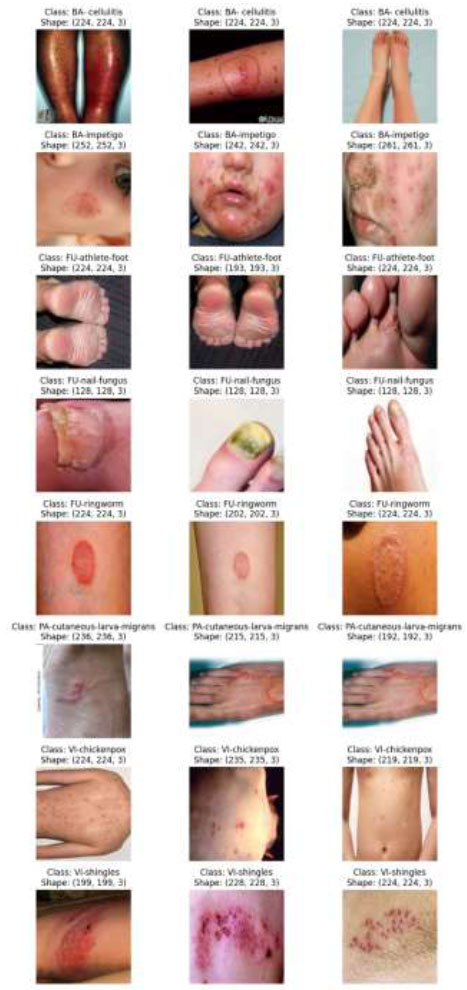

3.3. Dataset Description

The Skin-Disease-Dataset, comprising 1159 images in total, was obtained from Kaggle [44]. It is divided into separate training and test sets, and the accuracy of the CNN models is evaluated on the test set. The training set contains 925 images and the test set contains 234 images, corresponding to an approximately 80/20 split. Table 2 lists the number of training and test images for each class.

The dataset consists of four categories: viral, fungal, parasitic, and bacterial infections, which are categorized into 8 classes: cellulitis, athlete's foot, ringworm, chickenpox, impetigo, nail fungus, cutaneous larva migrans, and shingles.

A number of exemplary images from the training set are displayed in Fig. (3), each of which has been rigorously annotated to outline the distinguishing traits and symptoms of distinct dermatoses.

| S. No. | Disease Name | Infection Type | Training Images | Testing Images |
|---|---|---|---|---|
| 1. | Cellulitis | Bacterial | 136 | 34 |
| 2. | Shingles | Viral | 130 | 33 |
| 3. | Athlete-foot | Fungal | 124 | 32 |
| 4. | Cutaneous-larva-migrans | Parasitic | 100 | 25 |
| 5. | Ringworm | Fungal | 90 | 23 |
| 6. | Nail-fungus | Fungal | 129 | 33 |
| 7. | Chickenpox | Viral | 136 | 34 |
| 8. | Impetigo | Bacterial | 80 | 20 |
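As a quick consistency check, the per-class counts in Table 2 can be summed and turned into the class weights mentioned in Section 3.1. The weighting formula below, n_samples / (n_classes × n_c), is the common "balanced" heuristic (the one scikit-learn documents for `class_weight="balanced"`); the paper does not specify its exact formula, so treat it as an assumption, and the helper name as hypothetical.

```python
# Per-class image counts from Table 2: (training, testing).
TABLE_2 = {
    "Cellulitis": (136, 34),
    "Shingles": (130, 33),
    "Athlete-foot": (124, 32),
    "Cutaneous-larva-migrans": (100, 25),
    "Ringworm": (90, 23),
    "Nail-fungus": (129, 33),
    "Chickenpox": (136, 34),
    "Impetigo": (80, 20),
}


def balanced_class_weights(train_counts):
    """Assumed 'balanced' heuristic: weight_c = n_samples / (n_classes * n_c),
    so rarer classes contribute more to the loss."""
    n_samples = sum(train_counts.values())
    n_classes = len(train_counts)
    return {c: n_samples / (n_classes * n) for c, n in train_counts.items()}


train_counts = {name: train for name, (train, _) in TABLE_2.items()}
n_train = sum(train_counts.values())
n_test = sum(test for _, test in TABLE_2.values())
weights = balanced_class_weights(train_counts)

print(n_train, n_test, n_train + n_test)  # split sizes
print(f"training fraction: {n_train / (n_train + n_test):.3f}")
for name, w in sorted(weights.items(), key=lambda kv: -kv[1]):
    print(f"{name:25s} {w:.3f}")
```

The counts sum to 925 training and 234 test images (1159 in total, a training fraction of about 0.798). Under this heuristic, Impetigo (80 training images) receives the largest weight, about 1.45, and Cellulitis and Chickenpox (136 each) the smallest, about 0.85.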

Images of the training dataset belonging to different categories [46].

The test dataset used here is a carefully curated set of dermatology photos that assesses the effectiveness and generalizability of the proposed machine learning model across a wide range of skin diseases. A selection of photos from the test set, each representing a different dermatosis seen in clinical practice, is displayed in Fig. (4). These photos have been thoroughly annotated by professional dermatologists to provide ground-truth labels for performance assessment.

Images of the test dataset belonging to different classes [46].

3.4. Transfer Learning Models

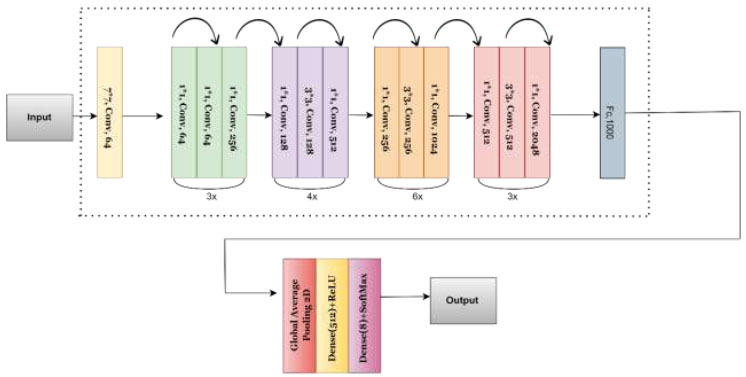

Convolutional neural networks are deep learning techniques used in image and signal processing, among other applications, to classify objects, find patterns, and identify regions of interest. Two of the CNN architectures used in this paper, ResNet50 and VGG16, are introduced first. These two models were chosen for their popularity and benchmark performance across various image classification tasks. Moreover, they support transfer learning, allowing features learned on general image recognition tasks to be fine-tuned for skin disease classification. Transfer learning is especially useful for small medical imaging datasets, as it improves both model performance and convergence rate. A prediction classifier is attached to each of these CNN architectures. ResNet50 is a 50-layer CNN consisting of 48 convolutional layers, one max-pooling layer, and one average-pooling layer, while VGG16 is a 16-layer CNN with 13 convolutional layers and 3 fully connected layers [45, 46].

4. RESNET50

ResNet50, one of the most common CNN architectures, belongs to the ResNet (Residual Networks) family, a collection of models designed to overcome the difficulties of training deep neural networks. ResNet architectures are available at various depths, including ResNet-18, ResNet-32, and others. ResNet50, a mid-sized version, uses residual (skip) connections so that the network learns residual functions that map the input to the expected output [47-49]. These connections allow the network to be made much deeper without suffering from vanishing gradients. The architecture follows two design rules. First, layers with the same output feature-map size have the same number of filters. Second, when the feature-map size is halved, the number of filters is doubled to preserve the time complexity per layer. The four key components of the ResNet50 architecture are the convolutional layers, the identity block, the convolutional block, and the fully connected layers. The initial convolutional layers extract features from the input image, which the identity and convolutional blocks then transform, and the fully connected layers determine the final classification. Convolutions are followed by batch normalization and a ReLU activation. The max pooling layer shrinks the spatial dimensions of the feature maps while preserving the most salient features from the convolutional layers. The identity block and the convolutional block are the two primary building blocks of ResNet50. In the identity block, the input is added back to the output of a stack of convolutional layers, so the stacked layers learn a residual function F(x) and the block outputs F(x) + x. Both blocks use a bottleneck design in which a 1x1 convolution reduces the number of filters before the 3x3 convolution; the convolutional block additionally applies a 1x1 convolution on its shortcut path so that the input matches the dimensions of the output. The fully connected layers form the final component of ResNet50: the output of the last fully connected layer is passed to a softmax activation to produce the final classification. Fig. (5) gives a comprehensive overview of the proposed ResNet50 architecture. First, a global average pooling layer is added, which averages each feature map over all spatial positions and reduces the output to a fixed-length vector with one value per channel. The output of this GlobalAveragePooling2D layer is fed to a fully connected dense layer of 512 units with a ReLU activation, which adds non-linearity to the model. Finally, a second fully connected dense layer of eight units is connected to the output of the 512-unit layer, and the SoftMax activation function gives the predicted probability for each of the eight classes. Because these probabilities sum to one, this output layer is suitable for multi-class classification.
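The custom ResNet50 head can be illustrated as a NumPy forward pass. This sketch assumes a 224 x 224 input, for which the ResNet50 base (loaded without its top layers) outputs a 7 x 7 x 2048 feature map; the random features and weights are placeholders standing in for the backbone output and the trained head, so the predicted classes carry no meaning. The function names are illustrative, not part of any library.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def resnet50_head(feature_maps, w1, b1, w2, b2):
    """Forward pass of the head: GAP -> Dense(512, ReLU) -> Dense(8, softmax).
    feature_maps has shape (batch, height, width, 2048)."""
    pooled = feature_maps.mean(axis=(1, 2))  # GlobalAveragePooling2D -> (batch, 2048)
    hidden = relu(pooled @ w1 + b1)          # Dense(512) + ReLU -> (batch, 512)
    return softmax(hidden @ w2 + b2)         # Dense(8) + softmax -> (batch, 8)


rng = np.random.default_rng(0)
features = rng.standard_normal((4, 7, 7, 2048))  # placeholder backbone output for 4 images
w1 = rng.standard_normal((2048, 512)) * 0.02     # placeholder (untrained) weights
b1 = np.zeros(512)
w2 = rng.standard_normal((512, 8)) * 0.02
b2 = np.zeros(8)

probs = resnet50_head(features, w1, b1, w2, b2)
predicted = probs.argmax(axis=1)  # predicted label = most probable class
print(probs.shape, predicted)
```

Global average pooling makes the head independent of the spatial size of the feature map: any (height, width) collapses to a 2048-value vector, so only the 2048 × 512 and 512 × 8 dense layers (1,053,192 weights and biases in total) need training.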

Proposed architecture of the ResNet-50 model.

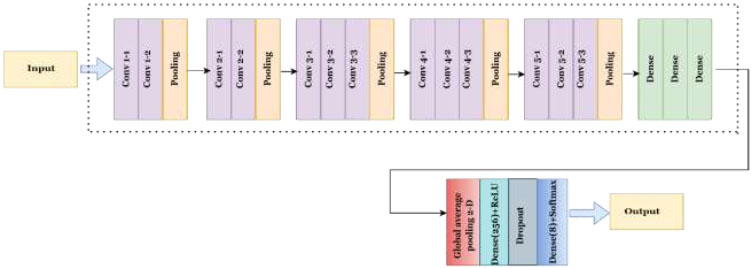

4.1. VGG-16

The Visual Geometry Group at the University of Oxford introduced the VGG convolutional neural network design. Developed for large-scale object recognition, VGG16 classifies images into 1000 separate categories. It is one of the most straightforward and effective image classification networks and is widely used for transfer learning. Its name reflects its depth: its 16 weight layers comprise 13 convolutional layers and 3 fully connected layers.

VGG16 takes an input of size (224, 224) with three RGB channels. Rather than relying on a large number of hyperparameters, VGG16 consistently uses 3x3 convolution filters with stride 1 and same padding, and 2x2 max-pooling layers with stride 2. This arrangement of convolutional and max-pooling layers is maintained throughout the architecture. The Conv-1 block has 64 filters, Conv-2 has 128, Conv-3 has 256, and Conv-4 and Conv-5 have 512 filters each. The stack of convolutional layers is followed by three Fully Connected (FC) layers: the first two have 4096 channels each, and the third performs 1000-way ILSVRC classification and therefore has 1000 channels (one per class). The final layer is a soft-max layer. Known for its simplicity and efficiency, VGG-16 performs well in a range of computer vision tasks, such as image classification and object detection [40, 41]. Overall, the model consists of blocks of convolutional layers, each followed by a max-pooling layer, with the number of filters increasing with depth.
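The layer sizes listed above determine the parameter count of the original VGG16. The sketch below derives it from the standard VGG16 configuration (3x3 kernels with biases, five 2x2 pooling stages, and a 4096-4096-1000 classifier); the configuration list and function name are illustrative. The custom head used in this study replaces the three FC layers, so only the convolutional count carries over to the proposed model.

```python
# Standard VGG16 convolutional configuration:
# integers are 3x3 conv filter counts, "M" is a 2x2 max-pooling layer (stride 2).
VGG16_CONV = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
              512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_param_counts(num_classes=1000, input_size=224):
    """Return (conv_params, fc_params) for VGG16, biases included."""
    conv_params, in_ch, size = 0, 3, input_size
    for layer in VGG16_CONV:
        if layer == "M":
            size //= 2                                    # pooling halves height and width
        else:
            conv_params += 3 * 3 * in_ch * layer + layer  # kernel weights + biases
            in_ch = layer
    # Flattened 7x7x512 map -> 4096 -> 4096 -> num_classes
    fc_sizes = [size * size * in_ch, 4096, 4096, num_classes]
    fc_params = sum(a * b + b for a, b in zip(fc_sizes, fc_sizes[1:]))
    return conv_params, fc_params


conv, fc = vgg16_param_counts()
print(f"conv: {conv:,}  fc: {fc:,}  total: {conv + fc:,}")
```

For the ImageNet model this gives 14,714,688 convolutional parameters and 123,642,856 FC parameters (138,357,544 in total), so most of VGG16's weights sit in the fully connected layers that the custom head discards.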

The proposed VGG-16 structure is shown in Fig. (6). A GlobalAveragePooling2D layer is added to reduce the spatial size of the feature maps produced by the base model. It is followed by a dense layer of 256 units with ReLU activation. A dropout layer with a dropout rate of 0.5 is added to prevent overfitting. Finally, a dense layer with num_classes units and a softmax activation function performs multi-class classification.
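The dropout layer's behavior can be sketched as inverted dropout, the formulation used by the Keras `Dropout` layer: during training each unit is zeroed with probability `rate` and the survivors are scaled by 1/(1 - rate), while at inference the input passes through unchanged. The all-ones input below is a hypothetical stand-in for the 256-unit ReLU output, and the `dropout` function is illustrative.

```python
import numpy as np


def dropout(x, rate, training, rng):
    """Inverted dropout: in training, zero each unit with probability `rate`
    and scale the survivors by 1 / (1 - rate); at inference return x unchanged."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate  # True for units that survive
    return np.where(keep, x / (1.0 - rate), 0.0)


rng = np.random.default_rng(0)
hidden = np.ones((1000, 256))  # stand-in for 1000 samples of the 256-unit ReLU output
train_out = dropout(hidden, 0.5, training=True, rng=rng)
infer_out = dropout(hidden, 0.5, training=False, rng=rng)
print(f"zeroed fraction: {(train_out == 0).mean():.3f}, mean: {train_out.mean():.3f}")
```

Scaling the surviving units keeps the expected activation unchanged (here, survivors become 2.0 and the mean stays close to 1.0), so no rescaling is needed at inference time.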

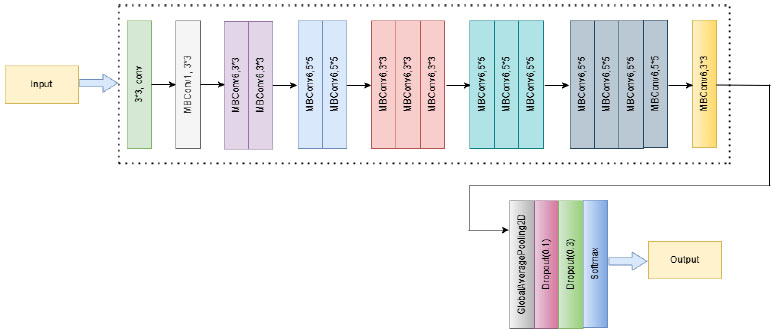

4.2. EFFICIENTNETB0

EfficientNetB0 is a convolutional neural network that achieves high accuracy and efficiency in image classification by balancing network depth, width, and input resolution through compound scaling [47, 50]. The network begins with a 3×3 convolution stem, followed by a sequence of mobile inverted bottleneck (MBConv) blocks that build progressively richer feature representations using depthwise separable convolutions and squeeze-and-excitation modules. These blocks are repeated with different kernel sizes (3×3 and 5×5) to extract increasingly complex features from the input images. The network ends with a 1×1 convolution, global average pooling, and a fully connected softmax output layer. In this study, EfficientNetB0 was initialized with pre-trained ImageNet weights, and the classification head was replaced with dense and dropout layers for skin disease classification. All layers were unfrozen to enable end-to-end fine-tuning on the domain-specific dataset, while the compact EfficientNetB0 backbone keeps the computational cost of this fine-tuning moderate.
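Compound scaling ties the three dimensions to a single coefficient φ: the depth multiplier is α^φ, the width multiplier is β^φ, and the resolution multiplier is γ^φ, with α·β²·γ² ≈ 2 so that FLOPs roughly double for each unit increase of φ. The sketch below uses α = 1.2, β = 1.1, and γ = 1.15, the values reported by the original EfficientNet authors; the baseline B0 corresponds to φ = 0, where every multiplier equals 1. The function names are illustrative.

```python
# Scaling coefficients reported by the EfficientNet authors for the B0 baseline
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scale(phi):
    """Depth, width, and resolution multipliers for compound coefficient phi."""
    return ALPHA ** phi, BETA ** phi, GAMMA ** phi


def flops_multiplier(phi):
    """FLOPs grow roughly in proportion to depth * width**2 * resolution**2."""
    d, w, r = compound_scale(phi)
    return d * w ** 2 * r ** 2


for phi in range(4):
    d, w, r = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, "
          f"resolution x{r:.2f}, FLOPs x{flops_multiplier(phi):.2f}")
```

Width and resolution enter squared because both the number of channels and the number of spatial positions multiply the work done by each convolution.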

The EfficientNetB0 model serves as a pre-trained backbone for feature extraction in the proposed methodology, as shown in Fig. (7). A GlobalAveragePooling2D layer reduces the spatial size of the high-level feature maps generated by the base model, adapting it to multi-class skin disease classification. A dense layer with 128 units and ReLU activation then applies a non-linear transformation to the pooled features. To avoid overfitting, a dropout layer with a rate of 0.3 is included. Lastly, a dense layer with softmax activation classifies the output into the predefined skin disease categories.
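Because the backbone is pre-trained, the new head adds only a small number of weights. The sketch below counts them, assuming eight output classes (the dataset's eight disease categories) and the 1280-channel output of the Keras EfficientNetB0 backbone without its top; GlobalAveragePooling2D and Dropout contribute no weights. The helper names are hypothetical.

```python
def dense_params(fan_in, units):
    """Weights plus one bias per unit for a fully connected (Dense) layer."""
    return fan_in * units + units


def head_params(backbone_channels, hidden_units, num_classes):
    """GAP and Dropout have no weights, so only the two Dense layers count."""
    return (dense_params(backbone_channels, hidden_units)
            + dense_params(hidden_units, num_classes))


NUM_CLASSES = 8  # the eight skin disease categories in the dataset

# EfficientNetB0 head: 1280-d pooled features -> Dense(128) -> Dropout(0.3) -> Dense(8)
effnet_head = head_params(1280, 128, NUM_CLASSES)
print(effnet_head)  # 165000
# For comparison, the VGG16 head: 512-d pooled features -> Dense(256) -> Dropout(0.5) -> Dense(8)
vgg_head = head_params(512, 256, NUM_CLASSES)
print(vgg_head)     # 133384
```

Both heads are a small fraction of their backbones' parameters, so most of each model's capacity comes from the pre-trained features.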

Proposed architecture of the VGG16 model.

Proposed architecture of the EfficientNetB0 model.

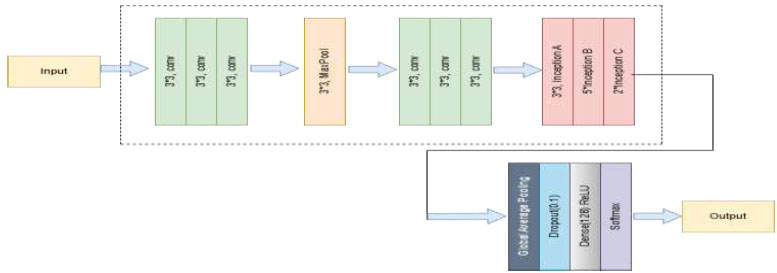

4.3. INCEPTIONV3

Google developed InceptionV3, a deep convolutional neural network architecture designed to achieve high classification accuracy while keeping processing efficient [37, 40]. InceptionV3 is built from Inception modules, which perform several convolutions (1×1, 3×3, and 5×5) in parallel within a single layer so that the network can extract features at different scales. To improve speed and regularisation, InceptionV3 also uses batch normalisation, auxiliary classifiers, and factorized convolutions.

In the proposed method, the InceptionV3 network with pre-trained ImageNet weights is employed as a feature extractor, as shown in Fig. (8). To adapt it to skin disease classification, the final fully connected layers of the base model are removed. A GlobalAveragePooling2D layer is introduced to reduce the spatial size of the feature maps. A 128-unit dense layer with ReLU activation follows, providing a non-linear transformation that enhances the feature representation. To counteract overfitting, a Dropout layer with a rate of 0.3 is then applied. Finally, a dense output layer with softmax activation performs the multi-class classification of dermatoses. This configuration balances depth against processing cost, giving strong performance in medical image analysis applications.
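The factorized convolutions mentioned above replace a large kernel with smaller ones that cover the same receptive field. The sketch below counts weights (ignoring biases) for layers with equal input and output widths; the channel count of 64 is an arbitrary assumption, since the savings ratio does not depend on it. The results match the roughly 28% and 33% reductions described by the InceptionV3 authors. The helper names are illustrative.

```python
def conv_weights(kh, kw, c_in, c_out):
    """Weights of one kh x kw convolution layer (biases omitted)."""
    return kh * kw * c_in * c_out


def factorization_savings(channels=64):
    """Relative weight savings of InceptionV3-style factorizations.

    Input and output widths are kept equal, so the ratios do not depend on `channels`.
    """
    c = channels
    # A 5x5 convolution versus two stacked 3x3 convolutions (same 5x5 receptive field)
    five = conv_weights(5, 5, c, c)
    two_threes = 2 * conv_weights(3, 3, c, c)
    # A 3x3 convolution versus a 1x3 convolution followed by a 3x1 convolution
    three = conv_weights(3, 3, c, c)
    asymmetric = conv_weights(1, 3, c, c) + conv_weights(3, 1, c, c)
    return 1 - two_threes / five, 1 - asymmetric / three


save_5x5, save_3x3 = factorization_savings()
print(f"5x5 -> two 3x3: {save_5x5:.0%} fewer weights")    # 28%
print(f"3x3 -> 1x3 + 3x1: {save_3x3:.0%} fewer weights")  # 33%
```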

Proposed architecture of the InceptionV3 model.

5. PERFORMANCE METRICS

The proposed models were evaluated experimentally using Eqs. (1-4), which define accuracy, recall, precision, and F1-score. Table 3 defines the terms used in these performance metrics.

| Term | Meaning |
|---|---|
| True Positive (TP) | Correctly identifying that a person has the disease. |
| True Negative (TN) | Correctly identifying that a person does not have the disease. |
| False Positive (FP) | Identifying a person as having the disease when they do not. |
| False Negative (FN) | Identifying a person as not having the disease when they actually do. |

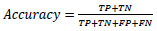

5.1. Accuracy

Accuracy refers to the number of samples identified correctly divided by the total number of samples in the dataset.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

5.2. Recall

Recall is the proportion of actual positive samples in the dataset that are correctly identified as positive.

$$\text{Recall} = \frac{TP}{TP + FN} \tag{2}$$

5.3. Precision

Precision refers to the proportion of correctly identified positive samples among the total number of predicted positive samples.

$$\text{Precision} = \frac{TP}{TP + FP} \tag{3}$$

5.4. F1 Score

F1-score is the harmonic mean of precision and recall, combining both into a single value.

$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$

Evaluating a classification model solely on the basis of accuracy can be misleading, especially when there is significant class imbalance. Therefore, in addition to accuracy, recall, precision, and F1-score are used to evaluate the classifiers.
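For a multi-class problem, Eqs. (1-4) are applied per class (one class treated as positive, the rest as negative) and then averaged. The sketch below computes them from a confusion matrix with rows as true labels and columns as predictions. It also shows that support-weighted recall always equals overall accuracy, which would explain the identical Accuracy and Recall rows in Table 4 if recall was averaged that way; the averaging scheme is not stated here, so this reading is an assumption. The function names and the toy matrix are illustrative, not results from this study.

```python
def per_class_metrics(cm):
    """Per-class (precision, recall, f1, support) from a square confusion matrix.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    n = len(cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # class k predicted as another class
        fp = sum(cm[i][k] for i in range(n)) - tp  # other classes predicted as k
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results.append((precision, recall, f1, tp + fn))
    return results


def summarize(cm):
    """Return accuracy plus macro- and support-weighted (precision, recall, f1)."""
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[k][k] for k in range(len(cm))) / total
    per_class = per_class_metrics(cm)
    macro = tuple(sum(m[i] for m in per_class) / len(per_class) for i in range(3))
    weighted = tuple(sum(m[i] * m[3] for m in per_class) / total for i in range(3))
    return accuracy, macro, weighted


# Toy 3-class confusion matrix (made-up counts)
cm = [[8, 1, 1],
      [2, 7, 1],
      [0, 1, 9]]
accuracy, macro, weighted = summarize(cm)
print(f"accuracy={accuracy:.4f}")
print("macro P/R/F1:", [round(v, 4) for v in macro])
print("weighted P/R/F1:", [round(v, 4) for v in weighted])
```

Weighted recall reduces to the sum of true positives divided by the total number of samples, which is exactly Eq. (1) in the multi-class case; weighted precision and F1 generally differ from accuracy.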

6. RESULTS AND DISCUSSION

This section examines the results obtained on the Kaggle Skin-Disease dataset using four pre-trained networks: ResNet50, VGG-16, EfficientNetB0, and InceptionV3. A total of 1,159 images were used, split into 925 training images and 234 test images.

6.1. Results for ResNet50 Model

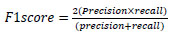

To evaluate the performance and generalization capacity of the skin disease classification model, the training and validation losses were tracked during training.

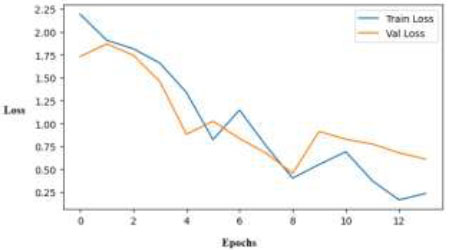

The training and validation losses of the ResNet50 model over eight epochs are displayed in Fig. (9). The steep decline in training loss during the early epochs shows that the model rapidly learns patterns from the training data. However, after the second epoch, the validation loss stays roughly constant, with some fluctuation, which suggests that the model may be overfitting.
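This reading of the loss curves can be made quantitative by locating the epoch with the lowest validation loss and measuring the final gap between validation and training loss. The sketch below does this for a hypothetical loss history; the numbers are made up for illustration and are not taken from Fig. (9), and the helper name is illustrative.

```python
def overfitting_report(train_loss, val_loss):
    """Summarize per-epoch loss histories (index 0 = epoch 1).

    Returns the epoch with the lowest validation loss, the final
    validation-minus-training gap, and how much the training loss kept
    falling after that best epoch (fitting the training set more closely
    without improving validation loss).
    """
    best_idx = min(range(len(val_loss)), key=lambda i: val_loss[i])
    final_gap = val_loss[-1] - train_loss[-1]
    train_drop_after_best = train_loss[best_idx] - train_loss[-1]
    return best_idx + 1, final_gap, train_drop_after_best


# Hypothetical eight-epoch history (illustrative values only, not read from Fig. 9)
train = [1.60, 0.55, 0.25, 0.12, 0.07, 0.05, 0.03, 0.02]
val = [0.90, 0.40, 0.38, 0.41, 0.37, 0.39, 0.40, 0.39]

best_epoch, gap, drop = overfitting_report(train, val)
print(best_epoch, round(gap, 2), round(drop, 2))
```

A validation loss that stops improving while the training loss keeps falling, leaving a persistent gap, is the pattern described above; in Keras, the `EarlyStopping` callback with `restore_best_weights=True` is a common way to act on it.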

Training and validation loss curves over epochs for the ResNet50 model.

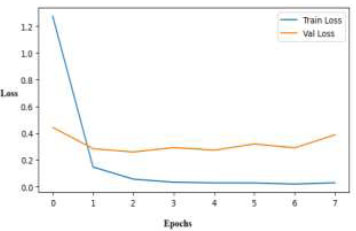

Training and validation accuracy curves across epochs for the ResNet50 model.

Training and validation accuracies were tracked while training the skin disease classification model to monitor its progress and its ability to generalize to unseen data. Fig. (10) displays the accuracy over 7 epochs, where one epoch is a complete pass through the training dataset. The y-axis represents accuracy, and the x-axis indicates the number of epochs. The training accuracy of the ResNet50 model rises sharply and approaches 100%, while the validation accuracy levels off at a high 93–94%. This indicates good performance, although the gap between the two curves suggests some overfitting after the first few epochs.

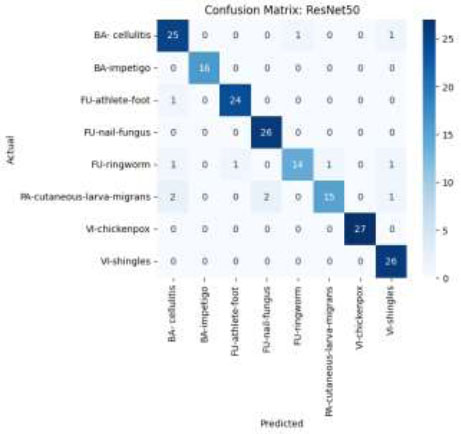

The confusion matrix in Fig. (11) provides a comprehensive overview of the ResNet50 model's classification results. Each column of the matrix indicates the predicted class labels, while each row indicates the true class labels. Although there are a few misclassifications between related classes, such as cellulitis and impetigo, the model performs well and consistently across a range of dermatological disorders.

Confusion matrix using the ResNet50 model.
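Per-class recall can be read off the diagonal after normalizing each row of a confusion matrix, and the largest off-diagonal cell identifies the most frequent confusion, such as the cellulitis/impetigo errors noted above. The sketch below uses class names from the dataset but made-up counts; the helper names are hypothetical.

```python
def row_normalize(cm):
    """Scale each row to sum to 1, so entry [i][j] is the share of true class i predicted as j."""
    normalized = []
    for row in cm:
        total = sum(row)
        normalized.append([v / total if total else 0.0 for v in row])
    return normalized


def most_confused(cm, labels):
    """Return (true_label, predicted_label, count) for the largest off-diagonal cell."""
    best = None
    for i, row in enumerate(cm):
        for j, count in enumerate(row):
            if i != j and (best is None or count > best[2]):
                best = (labels[i], labels[j], count)
    return best


labels = ["BA-cellulitis", "BA-impetigo", "FU-athlete-foot"]
# Made-up counts for illustration; rows = true labels, columns = predicted labels
cm = [[18, 3, 1],
      [2, 20, 0],
      [1, 0, 22]]

per_class_recall = [row[i] for i, row in enumerate(row_normalize(cm))]
print([round(r, 3) for r in per_class_recall])
print(most_confused(cm, labels))  # ('BA-cellulitis', 'BA-impetigo', 3)
```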

The pre-trained ResNet50 model's convolutional layers are first frozen to preserve the learned features, and then the final classification layers are modified or swapped out to meet the demands of the specific skin disease classification challenge. This method is particularly useful when dealing with small datasets, as it significantly reduces the need for large amounts of labeled data and computational power. Through fine-tuning or feature extraction, the ResNet50 model can accurately classify skin diseases by capturing intricate patterns and textures in dermatological images, ultimately aiding in early diagnosis and treatment.
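Freezing means that the pre-trained layers' weights are excluded from gradient updates while the new head is trained; in Keras this is done by setting a layer's `trainable` attribute to False. The framework-free toy below shows only that mechanism: the `Layer` class, the layer names, the gradients, and the learning rate are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Hypothetical stand-in for a network layer with a flat list of weights."""
    name: str
    weights: list
    trainable: bool = True


def freeze_all_but_last(layers, n_trainable):
    """Freeze every layer except the last n_trainable ones (the new classification head)."""
    for layer in layers[:len(layers) - n_trainable]:
        layer.trainable = False


def sgd_step(layers, grads, lr=0.1):
    """Plain gradient-descent update applied only to trainable layers."""
    for layer, grad in zip(layers, grads):
        if layer.trainable:
            layer.weights = [w - lr * g for w, g in zip(layer.weights, grad)]


model = [
    Layer("pretrained_conv_1", [0.5, -0.2]),
    Layer("pretrained_conv_2", [0.1, 0.3]),
    Layer("new_dense", [0.0, 0.0]),
    Layer("new_softmax", [0.0, 0.0]),
]
freeze_all_but_last(model, n_trainable=2)
sgd_step(model, grads=[[1.0, 1.0]] * len(model))
for layer in model:
    print(layer.name, layer.trainable, layer.weights)
```

Unfreezing some or all layers afterwards, as done for EfficientNetB0 in Section 4.2, turns this feature-extraction setup into full fine-tuning.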

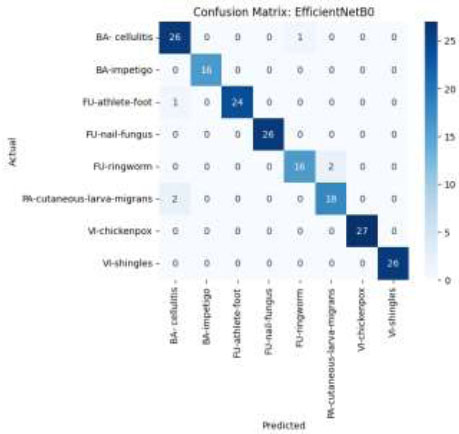

6.2. Results for VGG-16 Model

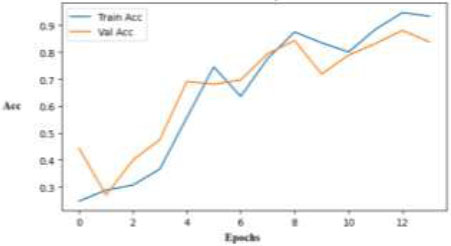

The training and validation losses of the VGG16 model over 12 epochs are depicted in Fig. (12). The VGG16 loss graph shows a generally declining trend in both training and validation losses, demonstrating effective learning. Fluctuations in validation loss after epoch 6 indicate slight overfitting or sensitivity to particular data points. Overall, however, the losses converge, indicating consistent model performance.

Fig. (13) shows a smooth learning curve for VGG-16. Validation accuracy exceeds training accuracy, indicating good generalization; this may partly reflect the 0.5 dropout in the classification head, since dropout is active only during training. As training progresses, both accuracies rise with some oscillation until they surpass 90%, confirming the model's capacity to learn complex patterns of skin diseases over time.

Training and validation loss curves across epochs for the VGG-16 model.

Training and validation accuracy curve across epochs for the VGG-16 model.

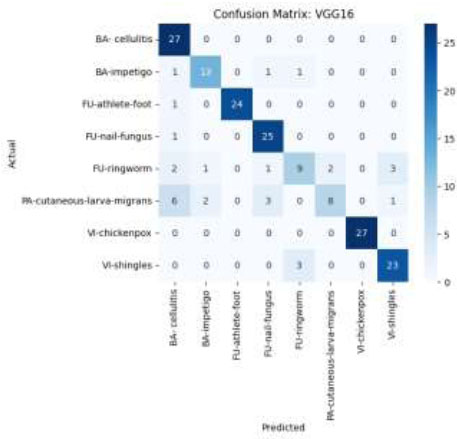

The confusion matrix in Fig. (14) summarizes the overall performance of the VGG-16 model. Classes such as “BA-cellulitis,” “FU-athlete-foot,” and “FU-nail-fungus” are predicted accurately, with few misclassifications. However, classes such as “PA-cutaneous-larva-migrans” and “FU-ringworm” show more confusion with comparable classes, which suggests that VGG16 struggles to distinguish visually similar dermatoses, especially when inter-class similarity is high or visual cues are weak.

With its 16 layers and straightforward, standardized architecture, VGG16 learns hierarchical features when pre-trained on a large dataset such as ImageNet. To preserve the learned features, the convolutional layers of the pre-trained VGG16 model are retained, and the final classification layers are modified or replaced to suit the skin disease classification problem. This strategy significantly reduces the need for extensive labeled data and computational resources, making it particularly advantageous for limited datasets. Through fine-tuning or feature extraction, VGG16 can distinguish various skin diseases by capturing intricate patterns and textures in dermatological images, thereby supporting prompt diagnosis and treatment.

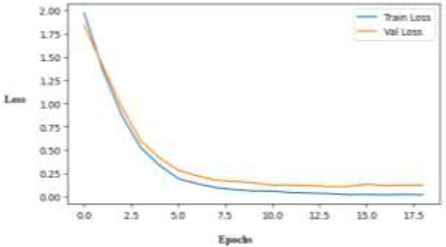

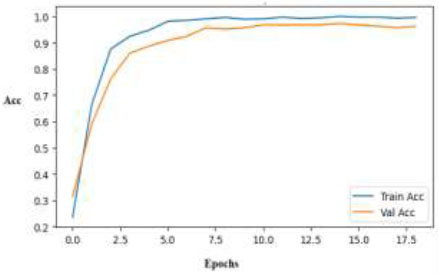

7. RESULTS FOR EFFICIENTNETB0 MODEL

The EfficientNetB0 results demonstrate superior performance in skin disease classification compared to the other models across all evaluation metrics. Its learning curves indicate stable training with minimal overfitting, suggesting that it is a reliable option for this multi-class classification problem. Over 18 epochs, the loss curves in Fig. (15) show steady, smooth convergence for both the training and validation sets. The narrowing gap between the curves and the low final loss values indicate that the model learns efficiently without significant overfitting and generalizes well to unseen data.

Confusion matrix using the VGG-16 model.

Training and validation loss curves of the EfficientNetB0 model.

The training and validation accuracy of the EfficientNetB0 model increases rapidly and steadily, approaching 100% within a few epochs, as depicted in Fig. (16). The narrow gap between the curves indicates excellent generalization and minimal overfitting, demonstrating the model's good performance on the skin disease dataset.

The confusion matrix of EfficientNetB0 in Fig. (17) demonstrates outstanding classification capability, with nearly flawless predictions across all skin disease categories. Misclassifications are minimal in every class, and VI-chickenpox, VI-shingles, and FU-nail-fungus achieve full or nearly full accuracy. This implies that EfficientNetB0 can differentiate subtle visual characteristics of skin conditions.

Training and validation accuracy curves for the EfficientNetB0 model.

Confusion matrix using the EfficientNetB0 model.

Of all the models tested, EfficientNetB0 classifies skin diseases with the highest accuracy. Its compound scaling method enables effective feature learning with fewer parameters, producing reliable and accurate predictions. Its consistently high training and validation scores demonstrate strong generalization, making it a promising option for real-world dermatological diagnostic practice.

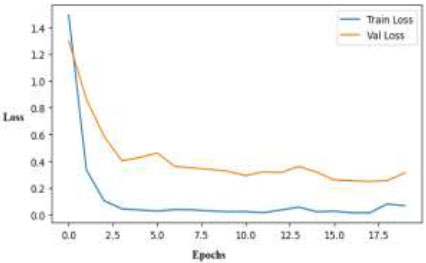

7.1. Results for the InceptionV3 Model

InceptionV3 is used to leverage deep hierarchical structure and multi-scale feature learning for skin disease classification. Renowned for balancing accuracy and efficiency, InceptionV3 was adapted to the dataset to assess its ability to identify complex dermatological patterns and to classify the various disease classes accurately. The loss curves of the InceptionV3 model in Fig. (18) show robust learning, with a sharp decline in training loss during the first few epochs to a very low final value. The model fits the training data extremely well; however, the validation loss initially decreases and then levels off at a higher value, leaving a generalization gap that likely reflects limited data variety.

Training and validation loss curves for the InceptionV3 model.

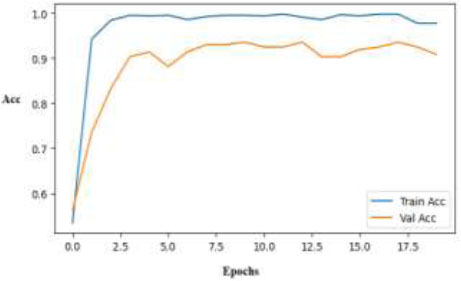

In the early phases of training, the InceptionV3 training accuracy converges sharply and rapidly approaches near-perfect accuracy, as illustrated in Fig. (19). The validation accuracy also increases significantly and settles at 90%, demonstrating the model's strong capacity to generalize to unseen data. This suggests that the architecture supports effective feature learning.

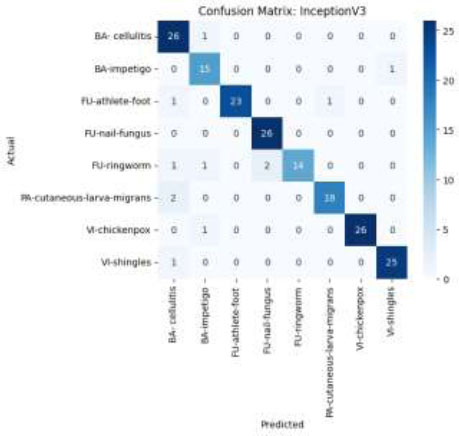

The confusion matrix of the InceptionV3 model, as shown in Fig. (20), demonstrates excellent classification performance across all eight classes of skin diseases. Correct class assignments are represented by the majority of predictions clustering along the diagonal. Although there is some confusion between categories that appear similar, such as cellulitis and athlete's foot, the model consistently performs well, with few misclassifications.

Training and validation accuracy curves for the InceptionV3 model.

Confusion matrix using the InceptionV3 model.

These results demonstrate the robust feature extraction of the InceptionV3 model's deep architecture. InceptionV3 is a reliable model for skin disease classification, with good accuracy, precision, and recall. Its consistent performance across classes confirms that it can be used for challenging medical image processing tasks.

7.2. Comparison of Proposed Models

A comparison of the performance metrics for all four deep learning models in categorizing skin disorders is shown in Table 4. The assessment metrics used for evaluating the classification performance of deep learning models include recall, precision, accuracy, and F1-score.

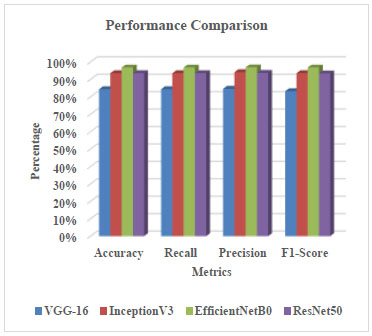

Fig. (21) graphically compares the InceptionV3, EfficientNetB0, ResNet50, and VGG-16 models. EfficientNetB0 achieved the highest accuracy, 96.76%, outperforming ResNet50 (93.51%), InceptionV3 (93.51%), and VGG16 (84.32%). Although all four models showed promising classification performance on key metrics such as accuracy, precision, recall, and F1-score, EfficientNetB0 demonstrated superior generalization and stability across the classes of the skin disease dataset.

| Metric | VGG-16 | InceptionV3 | EfficientNetB0 | ResNet50 |
|---|---|---|---|---|
| Accuracy | 84.32% | 93.51% | 96.76% | 93.51% |
| Recall | 84.32% | 93.51% | 96.76% | 93.51% |
| Precision | 84.57% | 94.09% | 96.84% | 93.66% |
| F1-Score | 83.15% | 93.53% | 96.77% | 93.33% |

Comparison through evaluation metrics of different models.
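Differences between accuracies close to 100% are often easier to interpret as error rates (100% minus accuracy). The sketch below performs this arithmetic on the accuracies reported in Table 4: EfficientNetB0's error rate of 3.24% is roughly half that of ResNet50 and InceptionV3 (6.49%) and about a fifth of VGG-16's (15.68%). The helper names are illustrative.

```python
# Accuracy values (%) as reported in Table 4
ACCURACY = {"VGG-16": 84.32, "InceptionV3": 93.51, "EfficientNetB0": 96.76, "ResNet50": 93.51}


def error_rate(model):
    """Percentage of evaluation images misclassified."""
    return 100.0 - ACCURACY[model]


def relative_error_reduction(better, worse):
    """Fraction of the weaker model's errors that the stronger model avoids."""
    return 1.0 - error_rate(better) / error_rate(worse)


for other in ("ResNet50", "InceptionV3", "VGG-16"):
    print(f"EfficientNetB0 vs {other}: "
          f"{error_rate('EfficientNetB0'):.2f}% vs {error_rate(other):.2f}% error, "
          f"{relative_error_reduction('EfficientNetB0', other):.1%} fewer errors")
```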

This distinction highlights the impact of architectural development: lighter yet deeper models such as EfficientNetB0 achieve higher accuracy at a lower computational cost, making them suitable for practical dermatological applications, particularly in resource-limited clinical settings.
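The size difference behind this argument can be made concrete. The sketch below uses the rounded parameter counts listed in the Keras Applications documentation for the standard ImageNet versions of each backbone, including their original 1000-class tops, so the fine-tuned models in this study differ slightly; these figures are not measured in this paper, and the helper name is illustrative.

```python
# Approximate parameter counts in millions, as listed in the Keras Applications
# documentation for the ImageNet models with their original 1000-class tops.
PARAMS_M = {"VGG16": 138.4, "ResNet50": 25.6, "InceptionV3": 23.9, "EfficientNetB0": 5.3}


def size_ratio(model, reference="EfficientNetB0"):
    """How many times more parameters `model` has than `reference`."""
    return PARAMS_M[model] / PARAMS_M[reference]


for name in ("VGG16", "ResNet50", "InceptionV3"):
    print(f"{name}: about {size_ratio(name):.1f}x the parameters of EfficientNetB0")
```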

CONCLUSION

The skin is the largest organ of the human body. This work thoroughly evaluates four well-known deep learning models (VGG16, ResNet50, InceptionV3, and EfficientNetB0) for their ability to classify various skin conditions using transfer learning. The models were trained on a publicly available Kaggle skin disease dataset using the Adam optimizer, a batch size of 32, and 20 epochs. To ensure consistency and model robustness, the images underwent standardized preprocessing and augmentation. Accuracy, precision, recall, and F1-score were used to represent the models' performance fairly across all classes. With an accuracy of 96.76%, a precision of 96.84%, and an F1-score of 96.77%, EfficientNetB0 outperformed ResNet50, InceptionV3, and VGG16. ResNet50 and InceptionV3 achieved identical accuracy (93.51%), demonstrating their capacity for deep feature extraction.

On the other hand, despite its simpler architecture, VGG16 achieved a respectable accuracy of 84.32%, highlighting its proficiency in image categorization tasks. These results support the notion that more sophisticated, optimized networks such as EfficientNetB0 are better suited to medical image processing, especially when trained on diverse, high-resolution datasets. The findings provide further evidence of the effectiveness of transfer learning in medical imaging, particularly in dermatology, where data may be sparse or imbalanced. With significant potential for use in telemedicine and automated screening applications, the study's systematic approach, consistent training schedule, and multi-model comparison contribute to the broader goal of integrating AI into diagnostic processes.

To reduce model bias and allow specificity to be evaluated, future studies could expand the dataset with more diverse and balanced skin condition classes, including benign or normal samples. Prediction accuracy could also be improved by combining multiple models or adding attention mechanisms. Clinical validation on real-world data and the development of a mobile-optimized inference system would enable practical deployment, particularly in underserved or rural areas with limited access to dermatological expertise.

AUTHORS’ CONTRIBUTIONS

The authors confirm their contributions to the paper as follows: D.G. and M.S.M.: Validation; D.K.: Data collection; Y.G.: Writing - original draft preparation; M.A., A.B.S., and R.R.: Methodology; M.U.: Data curation. All authors reviewed the results and approved the final version of the manuscript.

LIST OF ABBREVIATIONS

| Abbreviation | Definition |
|---|---|
| ANN | Artificial Neural Networks |
| CNN | Convolutional Neural Networks |
| ECOC | Error-Correcting Output Codes |
| FN | False Negative |
| FP | False Positive |
| FrCNN | Full-Resolution Convolutional Neural Network |
| GA | Genetic Algorithm |
| GFAC | Gradient and Feature Adaptive Contour |
| HFaFFNN | High-Frequency with a Multi-layered Feed-Forward Neural Network |
| ILSVRC | ImageNet Large Scale Visual Recognition Challenge |
| LCcHIV | Local Color-controlled Histogram Intensity Values |
| MBConv | Mobile Inverted Bottleneck Convolution |
| MLP | Multilayer Perceptron |
| PMEC | Parallel Max Entropy Correlation |
| ResNet | Residual Network |
| SVM | Support Vector Machine |
| TN | True Negative |
| TP | True Positive |
| VGG | Visual Geometry Group |

AVAILABILITY OF DATA AND MATERIAL

The data are available from the first author upon request. The dataset itself is sourced from https://www.kaggle.com/datasets/subirbiswas19/skin-disease-dataset/data.

FUNDING

This work was financially supported by the Deanship of Scientific Research, the Vice Presidency for Graduate Studies and Scientific Research, King Faisal University, Saudi Arabia, under the project KFU250177.

ACKNOWLEDGEMENTS

The authors would like to acknowledge the support of the Deanship of Scientific Research and the Vice Presidency for Graduate Studies and Scientific Research at King Faisal University, Saudi Arabia, under the project KFU250177.